Python’s versatility, intuitive syntax, and extensive libraries empower professionals to construct agile pipelines that adapt to evolving business needs. Python seamlessly automates workflows, manages complex transformations, and orchestrates smooth data movement, creating a foundation for efficient and adaptable data processing in diverse domains.

Data Pipelines in Python

A data pipeline is a set of automated procedures that facilitate the seamless flow of data from one point to another. The primary objective of a data pipeline is to enable efficient data movement and transformation, preparing it for data analytics, reporting, or other business operations.

Python is widely used in the creation of data pipelines due to its simplicity and adaptability. A data pipeline in Python is a sequence of data processing elements, where each stage takes data from the previous stage, performs a specific operation, and passes the output to the next stage. A common goal is to extract, transform, and load (ETL) data from various sources and formats into a single system where it can be analyzed and viewed together.
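To make the "each stage feeds the next" idea concrete, here is a minimal sketch using plain Python functions. The stage names (`drop_incomplete`, `add_amount_cents`), the `run_pipeline` helper, and the sample records are hypothetical and exist only for illustration.

```python
from functools import reduce

# Hypothetical stage: remove records that have no amount.
def drop_incomplete(rows):
    return [row for row in rows if row.get("amount") is not None]

# Hypothetical stage: add a derived field, leaving the input rows untouched.
def add_amount_cents(rows):
    return [{**row, "amount_cents": round(row["amount"] * 100)} for row in rows]

def run_pipeline(rows, stages):
    """Pass the output of each stage to the next stage, in order."""
    return reduce(lambda data, stage: stage(data), stages, rows)

raw = [
    {"id": 1, "amount": 9.99},
    {"id": 2, "amount": None},
    {"id": 3, "amount": 5.0},
]
result = run_pipeline(raw, [drop_incomplete, add_amount_cents])
print(result)
```

Because each stage only sees the output of the previous one, stages can be reordered, tested, or replaced independently.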

Python data pipelines are not limited to ETL tasks. They can also handle complex computations and large volumes of data, making them ideal for:

- Data cleaning

- Data transformation

- Data integration

- Data analysis

Python’s simplicity and readability make these pipelines easy to build, understand, and maintain. Furthermore, Python offers several frameworks, such as Luigi, Apache Beam, Airflow, Dask, and Prefect, that provide pre-built functionality and structure for creating data pipelines and can speed up the development process.

Key Advantages of Building Data Pipelines in Python

- Flexibility: Python’s extensive range of libraries and modules allows for a high degree of customization.

- Integration Capabilities: Python can seamlessly integrate with various systems and platforms. Its ability to connect to different databases, cloud-based storage systems, and file formats makes it a practical choice for constructing data pipelines in varied data ecosystems.

- Advanced Data Processing: Python’s ecosystem includes powerful data processing and analysis libraries like Pandas, NumPy, and SciPy. These libraries allow for complex data transformations and statistical analyses, enhancing the data processing capabilities within the pipeline.

Python Data Pipeline Frameworks

Python data pipeline frameworks are specialized tools that streamline the process of building, deploying, and managing data pipelines. These frameworks provide pre-built functionalities that can handle task scheduling, dependency management, error handling, and monitoring. They offer a structured approach to pipeline development, ensuring that the pipelines are robust, reliable, and efficient.

Several Python frameworks are available to streamline the process of building data pipelines. These include:

- Luigi: Luigi is a Python module for creating complex pipelines of batch jobs. It handles dependency resolution and helps in the management of a workflow, making it easier to define tasks and their dependencies.

- Apache Beam: Apache Beam offers a unified model that allows developers to construct data-parallel processing pipelines. It caters to both batch and streaming data, providing a high degree of flexibility. This adaptability makes Apache Beam a versatile tool for handling diverse data processing needs.

- Airflow: Apache Airflow is a platform for defining, scheduling, and monitoring workflows. You declare tasks and their dependencies in Python, and Airflow takes care of orchestrating and monitoring their execution.

- Dask: Dask is a versatile Python library designed to perform parallel computing tasks with ease. It allows for parallel and larger-than-memory computations and integrates well with existing Python libraries like Pandas and Scikit-Learn.

- Prefect: Prefect is a modern workflow management system that prioritizes fault tolerance and simplifies the development of data pipelines. It provides a high-level, Pythonic interface for defining tasks and their dependencies.
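The dependency resolution these frameworks perform can be illustrated with the standard library alone. The sketch below is not the API of Luigi, Airflow, or Prefect; it uses `graphlib.TopologicalSorter` (Python 3.9+) on a hypothetical task graph to show how a runner can derive a valid execution order from declared dependencies.

```python
from graphlib import TopologicalSorter

# Hypothetical task graph: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
    "report": {"load"},
}

def run_tasks(deps, actions):
    """Run every task after all of its dependencies; return the order used."""
    order = list(TopologicalSorter(deps).static_order())
    for name in order:
        actions[name]()
    return order

# Stand-in actions that only record that the task ran.
log = []
actions = {name: (lambda n=name: log.append(n)) for name in dependencies}

order = run_tasks(dependencies, actions)
print(order)
```

Real frameworks add scheduling, retries, state tracking, and monitoring on top of this ordering step.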

How to Build Python Data Pipelines: The Process

Let’s examine the five essential steps of building data pipelines:

1. Installing the Required Packages

Before you start building a data pipeline using Python, you need to install the necessary packages using pip, Python’s package installer. If you’re planning to use pandas for data manipulation, use the command `pip install pandas`. If you’re using a specific framework like Airflow, you can install it using `pip install apache-airflow`.

2. Data Extraction

With the packages installed, the pipeline begins by extracting data from various sources. This can involve querying databases, calling APIs, reading CSV files, or scraping web pages. Python simplifies this process with libraries like `requests` for HTTP and API calls, `beautifulsoup4` for parsing scraped HTML, `pandas` for reading CSV files, and `psycopg2` for PostgreSQL database interaction.
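As a rough illustration of extraction, the sketch below reads CSV data with `pandas.read_csv` and parses a JSON payload of the kind an API might return. To stay self-contained it uses in-memory strings instead of real files or network calls; `CSV_TEXT`, `API_RESPONSE`, and the helper names are made-up placeholders.

```python
import io
import json

import pandas as pd

# Hypothetical stand-ins for a CSV file and an API response body.
CSV_TEXT = "order_id,region,amount\n1,north,120.5\n2,south,\n3,north,80.0\n"
API_RESPONSE = '[{"region": "north", "manager": "Ana"}, {"region": "south", "manager": "Raj"}]'

def extract_csv(source):
    """Read CSV data from a path or file-like object into a DataFrame."""
    return pd.read_csv(source)

def extract_json(payload):
    """Turn a JSON array of objects into a DataFrame."""
    return pd.DataFrame(json.loads(payload))

orders = extract_csv(io.StringIO(CSV_TEXT))
managers = extract_json(API_RESPONSE)
print(orders)
print(managers)
```

In a real pipeline, `extract_csv` would typically receive a file path, and the JSON text would come from an HTTP client such as `requests`.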

3. Data Transformation

Once the data is extracted, it often needs to be transformed into a suitable format for analysis. This can involve cleaning the data, filtering it, aggregating it, or performing other computations. The pandas library is particularly useful for these operations. For example, you can use `dropna()` to remove missing values or `groupby()` to aggregate data.

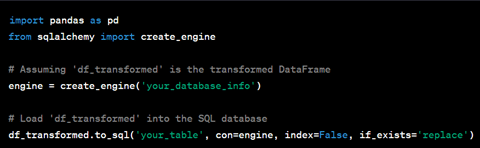
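A small sketch of the two pandas calls mentioned above: `dropna()` removes rows with a missing amount, and `groupby()` aggregates the rest by region. The `orders` data and the `transform` function are hypothetical examples, not part of any particular pipeline.

```python
import pandas as pd

# Hypothetical input with one missing amount.
orders = pd.DataFrame(
    {
        "order_id": [1, 2, 3, 4],
        "region": ["north", "south", "north", "south"],
        "amount": [120.5, None, 80.0, 40.0],
    }
)

def transform(df):
    """Drop rows without an amount, then total the amounts per region."""
    cleaned = df.dropna(subset=["amount"])
    totals = cleaned.groupby("region", as_index=False)["amount"].sum()
    return totals.rename(columns={"amount": "total_amount"})

summary = transform(orders)
print(summary)
```

Passing `subset=["amount"]` limits the missing-value check to the column that matters, so rows are not dropped because of gaps in unrelated columns.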

4. Data Loading

After the data has been transformed, it is loaded into a system where it can be analyzed. This can be a database, a data warehouse, or a data lake. Python provides several libraries for interacting with such systems, including `pandas` and `sqlalchemy` for writing data to an SQL database and `boto3` for seamless interaction with Amazon S3 in the case of a data lake on AWS.
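The loading step might look like the sketch below, which writes a DataFrame to a SQL table with `DataFrame.to_sql`. As an assumption made to keep the example self-contained, it targets an in-memory SQLite database through the standard `sqlite3` module rather than a `sqlalchemy` engine; the table name `region_totals` and the `load` helper are hypothetical.

```python
import sqlite3

import pandas as pd

# Hypothetical transformed data ready to be loaded.
summary = pd.DataFrame(
    {"region": ["north", "south"], "total_amount": [200.5, 40.0]}
)

def load(df, conn, table):
    """Write the DataFrame to `table`, replacing it if present; return the row count."""
    df.to_sql(table, conn, if_exists="replace", index=False)
    return conn.execute(f"SELECT COUNT(*) FROM {table}").fetchone()[0]

# Assumption: an in-memory SQLite database stands in for the real target system.
conn = sqlite3.connect(":memory:")
rows_loaded = load(summary, conn, "region_totals")
print(rows_loaded)
```

For a production database you would usually pass a `sqlalchemy` engine instead of the `sqlite3` connection, and choose `if_exists="append"` or `"replace"` depending on whether the load is incremental.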

5. Data Analysis

The final stage is analyzing the loaded data to generate insights. This can involve creating visualizations, building machine learning models, or performing statistical analysis. Python offers several libraries for these tasks, such as `matplotlib` and `seaborn` for visualization, `scikit-learn` for machine learning, and `statsmodels` for statistical modeling.
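As a very small example of the analysis stage, the sketch below computes a summary statistic and a correlation with pandas. It deliberately stops short of plotting or model fitting, and the `sales` data is invented for illustration.

```python
import pandas as pd

# Hypothetical loaded data: advertising spend and resulting revenue.
sales = pd.DataFrame(
    {
        "ad_spend": [10.0, 20.0, 30.0, 40.0],
        "revenue": [25.0, 45.0, 65.0, 85.0],
    }
)

# Average revenue across all rows.
mean_revenue = sales["revenue"].mean()

# Pearson correlation between spend and revenue.
correlation = sales["ad_spend"].corr(sales["revenue"])

print(mean_revenue, correlation)
```

The same DataFrame could then be handed to `matplotlib` or `seaborn` for plotting, or to `scikit-learn` or `statsmodels` for modeling.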

Throughout this process, it’s important to handle errors and failures gracefully, ensure data is processed reliably, and provide visibility into the state of the pipeline. Python’s data pipeline frameworks, such as Luigi, Airflow, and Prefect, provide tools for defining tasks and their dependencies, scheduling and running tasks, and monitoring task execution.
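One simple way to handle failures gracefully, if you are not relying on a framework, is to wrap each task in a retry loop that logs every attempt. The sketch below is a hand-rolled illustration, not how Luigi, Airflow, or Prefect implement retries; `run_with_retries` and `flaky_extract` are hypothetical names.

```python
import logging
import time

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("pipeline")

def run_with_retries(task, retries=3, delay_seconds=0.0):
    """Call `task` up to `retries` times, logging each outcome."""
    for attempt in range(1, retries + 1):
        try:
            result = task()
            logger.info("%s succeeded on attempt %d", task.__name__, attempt)
            return result
        except Exception:
            logger.exception("%s failed on attempt %d", task.__name__, attempt)
            if attempt == retries:
                raise  # Out of attempts: surface the failure to the caller.
            time.sleep(delay_seconds)

# Hypothetical task that fails twice before succeeding.
calls = {"count": 0}

def flaky_extract():
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("source temporarily unavailable")
    return ["row-1", "row-2"]

rows = run_with_retries(flaky_extract, retries=3)
print(rows)
```

The log lines give basic visibility into pipeline state, and re-raising after the last attempt ensures a persistent failure is not silently swallowed.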

The No-Code Alternative to Building Python Data Pipelines

Python, while offering a high degree of flexibility and control, does present certain challenges:

- Complexity: Building data pipelines with Python involves handling various complex aspects such as extracting data from multiple sources, transforming data, handling errors, and scheduling tasks. Implementing these manually can be a complex and time-consuming process.

- Potential for Errors: Manual coding can lead to mistakes, which can cause data pipelines to fail or produce incorrect results. Debugging and fixing these errors can also be a lengthy and challenging process.

- Maintenance: Manually coded pipelines often require extensive documentation to ensure they can be understood and maintained by others. This adds to development time and can make future modifications more difficult.

The process of building and maintaining data pipelines has become more complex. Modern data pipeline tools are designed to handle this complexity more efficiently. They offer a level of flexibility and adaptability that is difficult to achieve with traditional coding approaches, making data management more inclusive, adaptable, and efficient.

While Python remains a versatile choice, organizations are increasingly adopting no-code data pipeline solutions. This strategic shift is driven by the desire to democratize data management, foster a data-driven culture, ensure data governance, and streamline the pipeline development process, empowering data professionals at all levels.

Advantages of Using No-Code Data Pipeline Solutions

Opting for a no-code data pipeline solution offers several advantages:

- Efficiency: No-code solutions expedite the process of building data pipelines. They come equipped with pre-built connectors and transformations, which can be configured without writing any code. This allows data professionals to concentrate on deriving insights from the data rather than spending time on pipeline development.

- Accessibility: No-code solutions are designed to be user-friendly, even for non-technical users. They often feature intuitive graphical interfaces, enabling users to build and manage data pipelines through a simple drag-and-drop mechanism. This democratizes the process of data pipeline creation, empowering business analysts and other users with little or no programming experience to construct their own pipelines without needing to learn Python or any other programming language.

- Management and Monitoring Features: No-code solutions typically include built-in features for monitoring data pipelines. These may include alerts for pipeline failures, dashboards for monitoring pipeline performance, and tools for versioning and deploying pipelines.

Leveraging Astera’s No-Code Data Pipeline Builder

Astera is a no-code solution that’s transforming the way businesses handle their data. The advanced data integration platform offers a comprehensive suite of features designed to streamline data pipelines, automate workflows, and ensure data accuracy.

Here’s a look at how Astera stands out:

- No-Code Environment: Astera’s intuitive drag-and-drop interface allows users to visually design and manage data pipelines. This user-friendly environment reduces dependency on IT teams and empowers non-technical users to take an active role in data management, fostering a more inclusive data culture within the organization.

- Wide Range of Connectors: Astera comes equipped with pre-built connectors for various data sources and destinations. These include connectors for databases like SQL Server, cloud applications like Salesforce, and file formats like XML, JSON, and Excel. This eliminates the need for complex coding to establish connections, simplifying the data integration process.

- Pre-Built Transformations: Astera provides a wide array of data transformation functions, including merging, routing, and pivoting/unpivoting, among others. These operations enable users to cleanse, standardize, and enrich data as per their business requirements, ensuring that the data is in the right format and structure for analysis.

- Data Quality Assurance: Astera offers advanced data profiling and data quality features. Users can set predefined rules and check data against these rules to ensure its accuracy and reliability. This feature helps maintain data integrity, ensuring that your business decisions are based on high-quality data.

- Job Scheduling and Automation: The platform allows users to schedule jobs and monitor their progress and performance. Users can set up time-based or event-based triggers for tasks, automating the data pipeline process and ensuring the timely execution of data jobs.

Astera’s No-Code Platform

Take the first step towards efficient and accessible data management. Download your 14-day free trial of Astera Data Pipeline Builder and start building pipelines without writing a single line of code!

Authors:

Mariam Anwar